The roads I take...

KaiRo's weBlog

| Zeige Beiträge veröffentlicht im Juni 2007 und mit "UA String" gekennzeichnet an. Zurück zu allen aktuellen Beiträgen | |||||||||||||||||||||||||||||||||||||||||||

23. Juni 2007

A possible idea for user agents

When I went to bed late last night, I was not completely satisfied with the blog rant I had just written. Not that anything would be wrong with what is in there, I stand by that. But just ranting cannot be the solution - there must be some way to get what Camino people want without making UA strings suck even more.

Up to now, there are several possible ways in which people deal with websites closing out people because of UA strings they "don't recognize":

But then, I realized, all those solutions are so 1990s, so static, so Web 1.0 - and we're all talking in terms of the modern, new, shiny, dynamic Web 2.0 all the time. So maybe that great new world may have a better solution for that problem as well. And actually, I think it really has. I began to think we could just combine all the methods above with some other tooling we have, make everything dynamic, and we have a cool, new approach!

Firefox has this nice feature of preventing phishing through lists of known phishing sites it dynamically updates from the web. Currently, there's a plan to do a very similar thing for completely blocking sites that offer malware in Gecko.

So, what about having a list of sites that need UA spoofing, dynamically maintained by our users, and dynamically downloaded and used by the web browser? That way, we would specifically only spoof our UA (by adding any token the site needs) on specific websites. The list would have the domain name where spoofing is needed along with what sort of spoofing, the client would follow those rules.

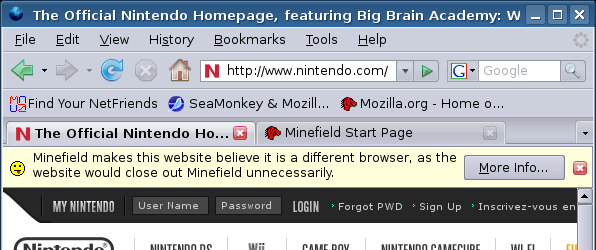

Of course, it's bad to do this automatically without telling the user that we are doing non-standard things - after all, the website might tell the user he is using Firefox even though he's using Camino. But then, there's this nice idea of info bars in the browser, which we could use for giving the user feedback about what's happening:

This is just a graphical mockup of how it would look, of course - I haven't implemented anything.

The "More Info..." button would open a new tab/window (depending on user prefs) with a page that carries all kind of info about our spoofing of UA strings on this website. The user can leave his comments there, find a contact where to nag the webmaster about this problem, etc. Of course, this is a page on our central site that also delivers the dynamic list and where our users can add spoofing for sites, change spoofing options for those sites, and similar things. This should be driven by the community and should combine the reporting system with evangelizing options.

Of course, several points are still open in this concept:

I'm willing to help with bits and pieces where I can, but I'm not a big XUL hacker and I have very little time to work on yet another new project.

It would be very cool to find someone who could implement a system like that.

If we design it well, it can help lots of browsers, not only Minefield, Camino and SeaMonkey, but also any number of others who could implement the same system.

Actually, we may even be able to leverage that system for going back to really short and useful UA strings for all our browsers, including even Firefox. Who knows?

Up to now, there are several possible ways in which people deal with websites closing out people because of UA strings they "don't recognize":

- Extend the UA string with some token they recognize. As said in the other post, this sucks. A lot.

- Evangelizing. Find out what kind of errors the site is making with browser detection, mail the webmasters, tell and help them to improve the situation. That's hard, manual work, often with very little reward, but where it helps, it makes the web better for everyone.

- Selective UA spoofing on the user side. Extensions like PrefBar give you a handy dropdown menu to spoof certain well-known UA strings when accessing websites, and a good way to switch back to the default. This is handy for experts, but normal users don't understand it. Additionally, the accessed websites don't even see your browser in any stats - and there's a high risk that you hide your real browser in more occasions than necessary.

But then, I realized, all those solutions are so 1990s, so static, so Web 1.0 - and we're all talking in terms of the modern, new, shiny, dynamic Web 2.0 all the time. So maybe that great new world may have a better solution for that problem as well. And actually, I think it really has. I began to think we could just combine all the methods above with some other tooling we have, make everything dynamic, and we have a cool, new approach!

Firefox has this nice feature of preventing phishing through lists of known phishing sites it dynamically updates from the web. Currently, there's a plan to do a very similar thing for completely blocking sites that offer malware in Gecko.

So, what about having a list of sites that need UA spoofing, dynamically maintained by our users, and dynamically downloaded and used by the web browser? That way, we would specifically only spoof our UA (by adding any token the site needs) on specific websites. The list would have the domain name where spoofing is needed along with what sort of spoofing, the client would follow those rules.

Of course, it's bad to do this automatically without telling the user that we are doing non-standard things - after all, the website might tell the user he is using Firefox even though he's using Camino. But then, there's this nice idea of info bars in the browser, which we could use for giving the user feedback about what's happening:

This is just a graphical mockup of how it would look, of course - I haven't implemented anything.

The "More Info..." button would open a new tab/window (depending on user prefs) with a page that carries all kind of info about our spoofing of UA strings on this website. The user can leave his comments there, find a contact where to nag the webmaster about this problem, etc. Of course, this is a page on our central site that also delivers the dynamic list and where our users can add spoofing for sites, change spoofing options for those sites, and similar things. This should be driven by the community and should combine the reporting system with evangelizing options.

Of course, several points are still open in this concept:

- The (advanced) user needs a possibility to opt out of spoofing for the site (for testing)

- The (advanced) user needs to be made aware of how to report pages in the first place

- Perhaps the user needs an option to mute the warning for pages he visits very often?

- and lots of others...

I'm willing to help with bits and pieces where I can, but I'm not a big XUL hacker and I have very little time to work on yet another new project.

It would be very cool to find someone who could implement a system like that.

If we design it well, it can help lots of browsers, not only Minefield, Camino and SeaMonkey, but also any number of others who could implement the same system.

Actually, we may even be able to leverage that system for going back to really short and useful UA strings for all our browsers, including even Firefox. Who knows?

Von KaiRo, um 14:00 | Tags: mozconcept, Mozilla, UA String | 8 Kommentare | TrackBack: 0

The fight for the suckiest UA string

Sure, User Agent strings suck. Well, actually, they are useful - at least for statistics.

This way, browser usage on different websites can be monitored and interesting stats can be generated, just like I did some time ago here.

And there are good specs that describe how a user agent should look (defined in HTTP 1.0 and HTTP 1.1 RFCs) - but even those already state "Note: Some existing clients fail to restrict themselves to the product token syntax within the User-Agent field."

Yay - the spec already notes that clients don't comply with the spec. Nice.

But it's even worse: The spec notes for what purpose this HTTP header should be sent: "This is for statistical purposes, the tracing of protocol violations, and automated recognition of user agents for the sake of tailoring responses to avoid particular user agent limitations."

Over the years, many web site designers extended the "avoid particular user agent limitations" to "block any unknown client from accessing content" though - and with this, all the problems started.

Websites started to sniff for "Mozilla/" at the start of the UA string (which Netscape had) and gave only those clients access to their (most of the time) non-standards-compliant content that it somehow displayed, and they did this hard enough that Microsoft could not release their browser without the "Mozilla/" at the start of the string, unless they wanted to be blocked from content. And clearly they didn't. Following that, almost any decent browser had to use Netscape-style "Mozilla/" user agent strings, even if they weren't the mythical "Mosaic killer" that this internal code name stood for.

When the Mozilla project wanted to note a Gecko version, and later the Firefox name and version, they were forced to hold on to the more and more useless "Mozilla/" prefix and some syntax surrounding it, and just add additional product tokens, ending up with a standards-compliant, but a bit lengthy convention for Mozilla user agents - which also SeaMonkey is using.

Instead of a handy

When those website that use user agent sniffing would do it correctly, they would send W3C-compliant content to every unknown browser and only do small tweaks for those which don't comply correctly (along with explicit checks for only those versions that are known not to comply, still sending the W3C-compliant version for future releases that might fix this) - and we would never have ended up even at this stage.

But that's not even where the story ends, actually, it gets much worse:

When Gecko gained market share, browsers like Konqueror and Safari began adding "(like Gecko)" to their UA string to fool websites testing for "Gecko" in the UA string into letting them in when they would close out anything beyond MSIE and Gecko. And then, we arrived at the stage where Firefox gained a whole lot of market share and those crappy web designers decided to let "Firefox" into their websites, apparently not knowing that Gecko is Gecko and Firefox is "just" Gecko plus a nice UI (which is cool enough, actually). Because of that, there is a number of websites that don't work in non-Firefox Gecko browsers even if they could - that includes SeaMonkey, the Firefox development builds labeled e.g. "Minefield", and also Camino.

Instead of helping us all and the whole web with increasing the market share of non-Firefox Gecko browsers and making web designers aware of the problem and the easy solution (yes, the by far hardest part), the Camino team is now trying to win the prize for the suckiest UA string of them all by adding a "like Firefox" string to their UA. WTF?

If we're continuing on this path, every useful browser will have to add this, and probably a few other strings, in the future. I don't intend to support this or any project which decides to go down that road.

It would have been nice if one day I could have used

or something like that.

The new proposal sounds like I may end up with one in this style though:

Thanks to the Camino team!

This way, browser usage on different websites can be monitored and interesting stats can be generated, just like I did some time ago here.

And there are good specs that describe how a user agent should look (defined in HTTP 1.0 and HTTP 1.1 RFCs) - but even those already state "Note: Some existing clients fail to restrict themselves to the product token syntax within the User-Agent field."

Yay - the spec already notes that clients don't comply with the spec. Nice.

But it's even worse: The spec notes for what purpose this HTTP header should be sent: "This is for statistical purposes, the tracing of protocol violations, and automated recognition of user agents for the sake of tailoring responses to avoid particular user agent limitations."

Over the years, many web site designers extended the "avoid particular user agent limitations" to "block any unknown client from accessing content" though - and with this, all the problems started.

Websites started to sniff for "Mozilla/" at the start of the UA string (which Netscape had) and gave only those clients access to their (most of the time) non-standards-compliant content that it somehow displayed, and they did this hard enough that Microsoft could not release their browser without the "Mozilla/" at the start of the string, unless they wanted to be blocked from content. And clearly they didn't. Following that, almost any decent browser had to use Netscape-style "Mozilla/" user agent strings, even if they weren't the mythical "Mosaic killer" that this internal code name stood for.

When the Mozilla project wanted to note a Gecko version, and later the Firefox name and version, they were forced to hold on to the more and more useless "Mozilla/" prefix and some syntax surrounding it, and just add additional product tokens, ending up with a standards-compliant, but a bit lengthy convention for Mozilla user agents - which also SeaMonkey is using.

Instead of a handy

"SeaMonkey/1.1.2 (Windows NT 5.1; de-AT) Gecko/1.8.1.4" (which would tell all statistics-relevant data and have a common "Gecko" name and version to detect to "avoid particular user agent limitations" common to all Gecko products), we end up with "Mozilla/5.0 (Windows; U; Windows NT 5.1; de-AT; rv:1.8.1.4) Gecko/20070617 SeaMonkey/1.1.2" because of that legacy.When those website that use user agent sniffing would do it correctly, they would send W3C-compliant content to every unknown browser and only do small tweaks for those which don't comply correctly (along with explicit checks for only those versions that are known not to comply, still sending the W3C-compliant version for future releases that might fix this) - and we would never have ended up even at this stage.

But that's not even where the story ends, actually, it gets much worse:

When Gecko gained market share, browsers like Konqueror and Safari began adding "(like Gecko)" to their UA string to fool websites testing for "Gecko" in the UA string into letting them in when they would close out anything beyond MSIE and Gecko. And then, we arrived at the stage where Firefox gained a whole lot of market share and those crappy web designers decided to let "Firefox" into their websites, apparently not knowing that Gecko is Gecko and Firefox is "just" Gecko plus a nice UI (which is cool enough, actually). Because of that, there is a number of websites that don't work in non-Firefox Gecko browsers even if they could - that includes SeaMonkey, the Firefox development builds labeled e.g. "Minefield", and also Camino.

Instead of helping us all and the whole web with increasing the market share of non-Firefox Gecko browsers and making web designers aware of the problem and the easy solution (yes, the by far hardest part), the Camino team is now trying to win the prize for the suckiest UA string of them all by adding a "like Firefox" string to their UA. WTF?

If we're continuing on this path, every useful browser will have to add this, and probably a few other strings, in the future. I don't intend to support this or any project which decides to go down that road.

It would have been nice if one day I could have used

SeaMonkey/4.3 (Linux x86_64; de) Gecko/3.2or something like that.

The new proposal sounds like I may end up with one in this style though:

Mozilla/5.0 (X11 [like Windows]; U; Linux x86_64 [like Intel Mac OS X]; de [like en-US]; rv:3.2) Gecko/20100704 (like KHTML) SeaMonkey/4.3 (like Firefox) (like Safari) (like Opera) (like Netscape) (like MSIE) (like Mosaic)Thanks to the Camino team!

Von KaiRo, um 03:23 | Tags: Mozilla, UA String | 9 Kommentare | TrackBack: 1